Currently experiencing high demand.

Current lead time: 2–3 weeks. All units are assembled in San Francisco. If you need it sooner, please reach out at founders@pamir.ai.

Pocket Computer For

AI Agents

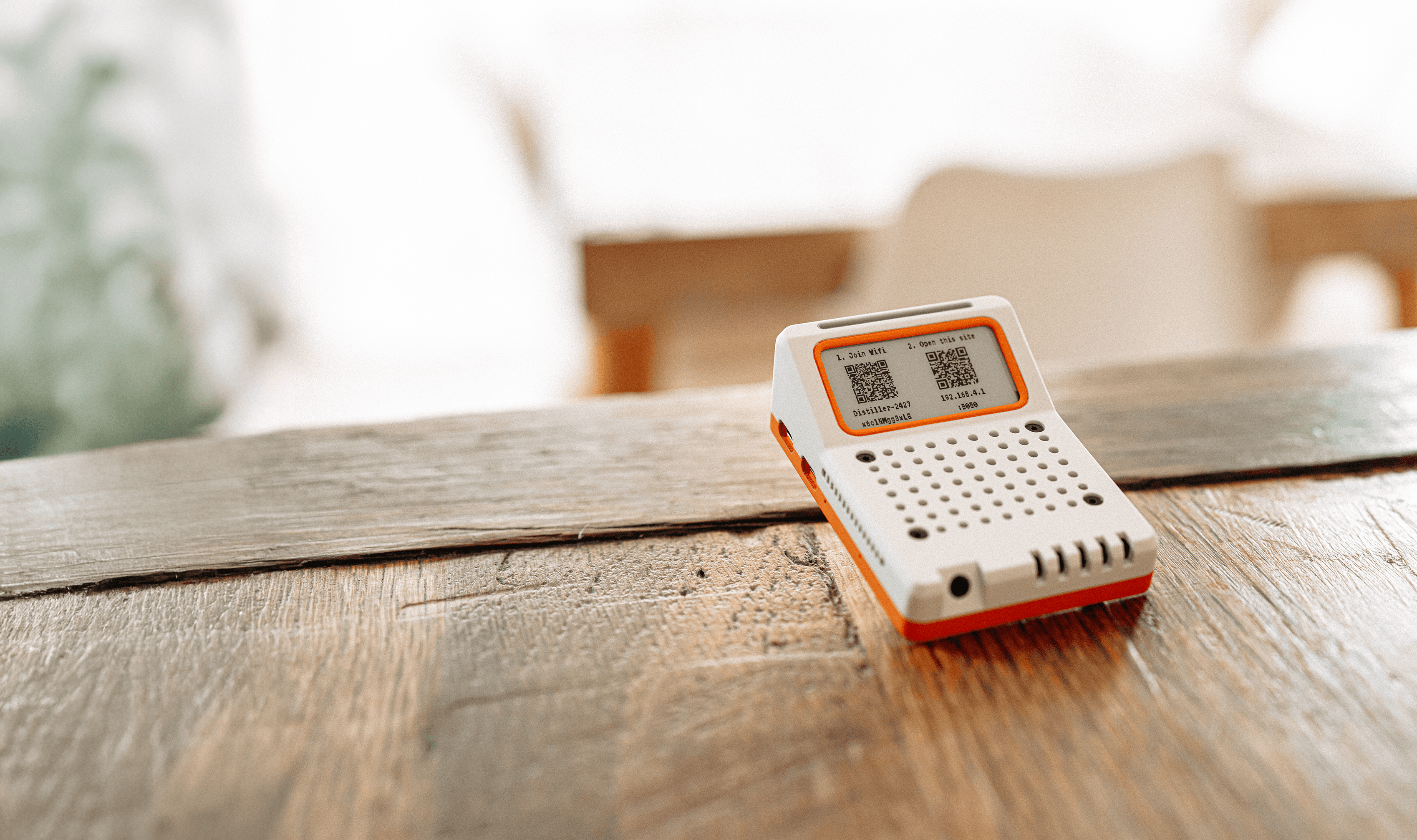

A dedicated linux computer for AI Agents. Optimized for 24/7 tasks, and remote access.

Distiller Agent Edition

Distiller Agent Layer

Custom linux layer that optimized for AI Agent like claude code, full OS and hardware context.

Distiller Hardware Layer

Enable your AI agent to see and control every protocol and interface. Auto flash and test.

· UART · GPIO · PWM

32GB eMMC*

Wi‑Fi®

2.4 GHz

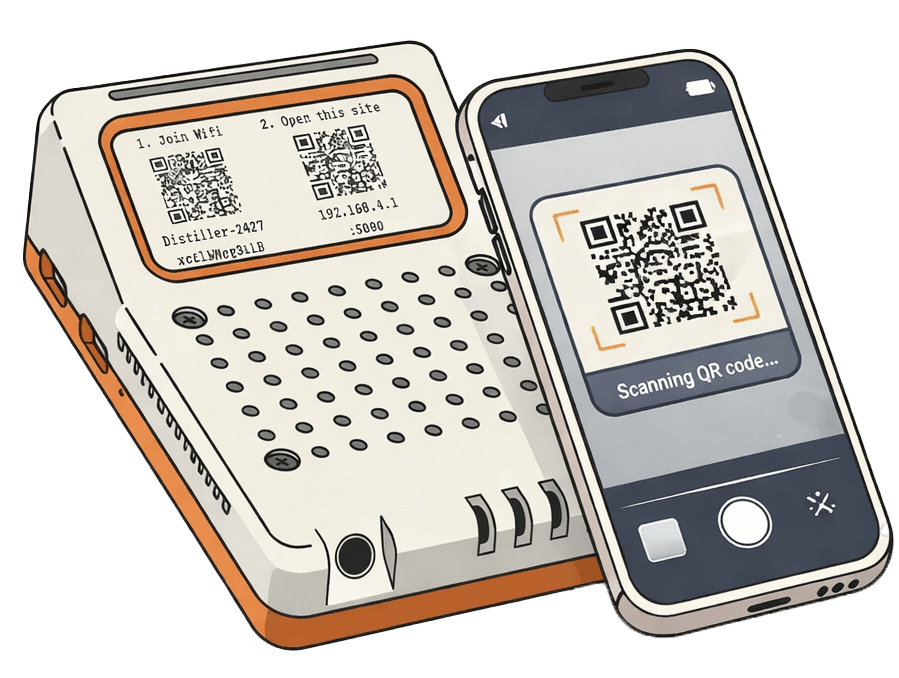

Access from anywhere.

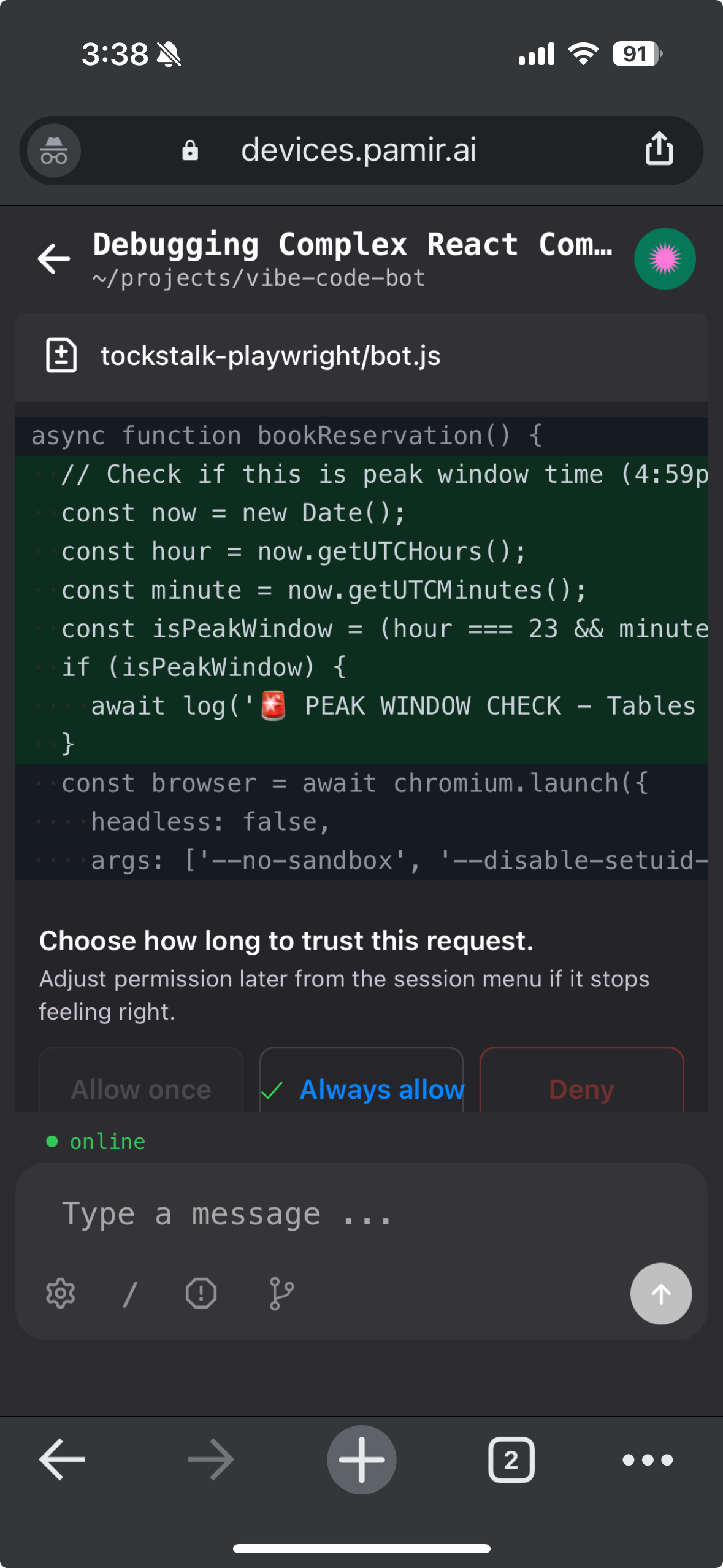

Open VS Code in your browser — full remote development with Claude Code, no install needed. Scan the QR, start coding from any device.

Prefer native? The Distiller iOS app (beta) lets you control your agent from your phone. Desktop app coming soon.

Software / DevOps

Developers who need 24/7 AI coding assistance and remote development capabilities.

Writeup on @pamir_ai"s ai coding assistant the Distiller alpha. Very cool retro futuristic vibes. https://t.co/R8gZ09ssla

— colonel panic (@_colonel_panic) October 10, 2025

@pamir_ai made it so easy for me to run yolo on this arducam by hooking it up to claude pic.twitter.com/fssziQcL6u

— Dong Anh (@_aaanh_) July 27, 2025

Firmware / Embedded

Hardware engineers who need to flash, test, and iterate on embedded systems.

Indie hackers / On-the-go

Builders who are not experts in software or hardware, but want to build with hardware.

Help us build the future

We're building the first computer designed for AI agents. We don't have all the answers yet — but we know we can't figure it out alone.

Distiller Alpha is an Open Alpha version — a limited run. Every unit sold teaches us something. You help us shape the Agent Computer that's actually useful, to be released later this year.

Looking for Clawdbot?

Also available pre-installed. Same hardware, same price.

New batch every month

- Feb

- Mar

- Apr

- May

- Jun

Currently shipping March batch, USA only. if you live outside of the US.